As I mentioned earlier, what was supposed to be a one-weekend project has turned into four-months-long fine tuning effort and even now in November still not in excellent shape. In this post I would like to share the lows and highs which one can encounter when writing such on the first sight simple and straightforward application – the ognLogbook.

The first instances false take-off and landing detections were spotted when exercising spins and winch-launches at the LKKO airfield. Detection routines based mainly on ground speed (GS), initial thresholds values and minimal time of flight of 2 minutes (one cannot do a circle faster, right?) were failing when GS sunk down close to 0 km/h after a winch-based take-off, kept at 0 thru the climb and continued right above minimal GS limit during the circle. To make things even more tough those guys and gals also trained emergency landings across the runway making duration of one ‘circle’ flight just around 60 seconds.

Later that week I have noticed the second type of detection problem – GS close to 0 during spins with Blanik somewhere around LKVM. If you do more than one spin it already takes some time which had been evaluated as landing with conseqeunt immediate take-off. This trouble originated mainly from the vaguely defined touch-and-go detection routine.

Another madness had been detected at Krakowski Aeroklub in Poland as shown on the picture above. My hypothesis is they were testing their engine after an overhaul by taking off and landing immediately while still on the runway. The time, speed and altitude difference conditions were met and thus this was also detected as a (short) flight.

And there is even more crazy stories like that! 🙂

Ongoing detection problems made me to consider spawning yet another functionality – calculated flight altitude above ground level (AGL). This became quite tricky as all the available code examples did not work for me (a common situation), hence a bit hacking had to be used. You can get the EU terrain elevation data freely from the ESA’s Copernicus sattelite with 20m horizontal resolution (nice) in tile-files of total size around 20GiB. These had to be resized to tiles 500x500m to reduce its size to some 8MiB. Why? The initial workaround could be seen in calling a command line utility (gdallocationinfo) for every position (well, not every, just those near take-off and landing) by specifying latitude & longitude and parsing resulting output from the proceses’ output stream. This meant to load the (joined) tile-file from the hard-drive (SSD became a life-saver!) into memory every time the script was called and waiting for the process to finish. You can imagine the overhead! A month later I discovered the gdal binary version needs to fit exactly the python library (you need to downgrade in the system) and this enabled every received location’s altitude to be resolved from a more precise (200x200m) file of current size of 160MiB in real-time! Here is still one drawback, however: The elevation data covers only the territory of the European Union. The rest of the world is just dragons..

The most recent problems have arisen recently with the autumn wave season: The winds at FL195 blew so strongly the gliders came to have a negative GS! (greetings and congrats again to Hedlanda aeroclub at ESNC in Sweden! 🙂 ). This made the AGL calculation an indispensable step for every received position from the OGN network.

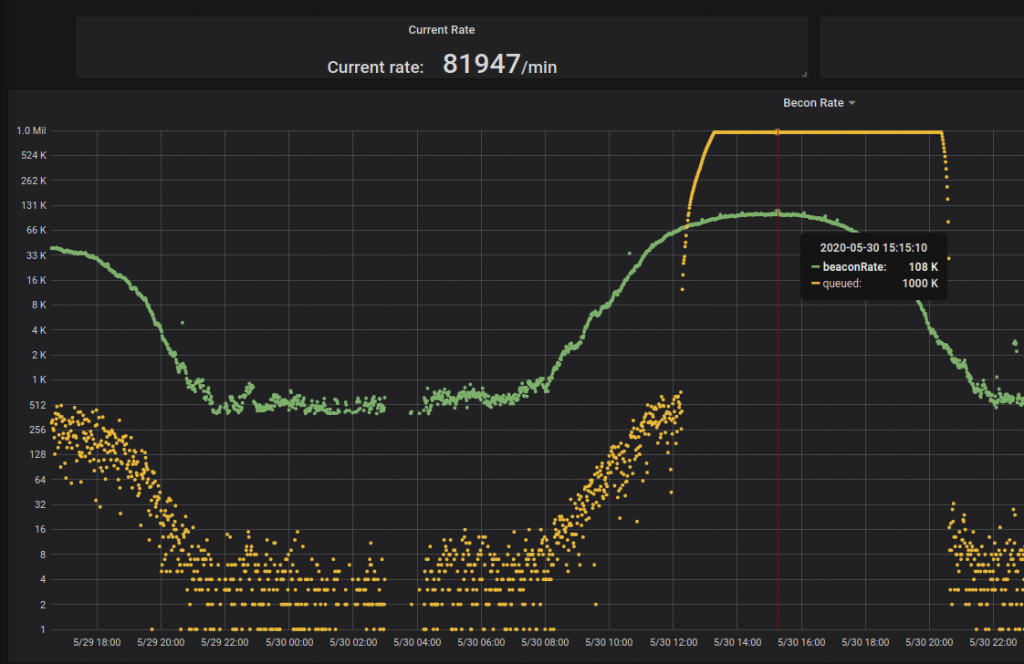

The initial ognLogbook‘s coverage was mere 300 kilometers around LKKA. Early on I started receiving inquiries if the area can be extended to cover this and that airfield so it has risen up to 1000 km with the center still at our hangar mainly due to the server performance issues. At that time the logbook was deployed on ‘CML6’, a machine based on remainder of an broken laptop with the Intel Core i7-3537U CPU @ 2.00GHz. This CPU hit its limit during a sweet summer day when the entire Europe went bananas and every available glider was pulled out of the hangars with a dream of at least 1000-kilometer-long flight 🙂 The last recorded beacon rate was 120000/min. The task queues were configured with ceiling of 1M (a million) records and the records that not fit into were brutally dropped.

But still this wasn’t really the biggest issue. The caches could have been configured as virtually limitless (well, only by total 8GB of RAM) and no data was dropped. Just then the data processing of such extent has taken until early morning hours .. two days later.

It was obvious that the 4 cores of the CPU (two physical) were not enough for five processing threads of the logbook application ( 1 – data ingestion, parsing and sorting, 2 – OGN beacon processor, 3 – FLARM beacon processor, 4 – ICAO beacon processor, 5 – maria db insertion queue and 6 – influx db insertion queue). The last one was only possible after migration to more powerful virtual server sporting four physical Intel Xeon Silver 4214 @2.20GHz cores allocated to just to our gorgeous ‘CML7’.

As long as the generously provided virtual machine is running we have a stable hive to process and further tinker with our OGN data. Currently, the entire flight record of every detected flying craft is stored into the influx for more detailed processing. Nonetheless, not for eternity – the retention period is configured for 7 days and then the data is discarded. The main reason is the tremendous amount of data: approximately 800 million records per week were stored at the and of August 2020.

There is still a lot of work that could be done – both in the realm of the data processing algorithms and also in the web interface (e.g. responsiveness, live data feed and more). Or rewriting into rust? If you were willing to pass a helping hand or can’t stand some issues you can see, you are warmly welcomed to contribute to the GitHub repository https://github.com/ibisek/ognLogbook. Any enhancements will surely be appreciated by everyone! 🙂