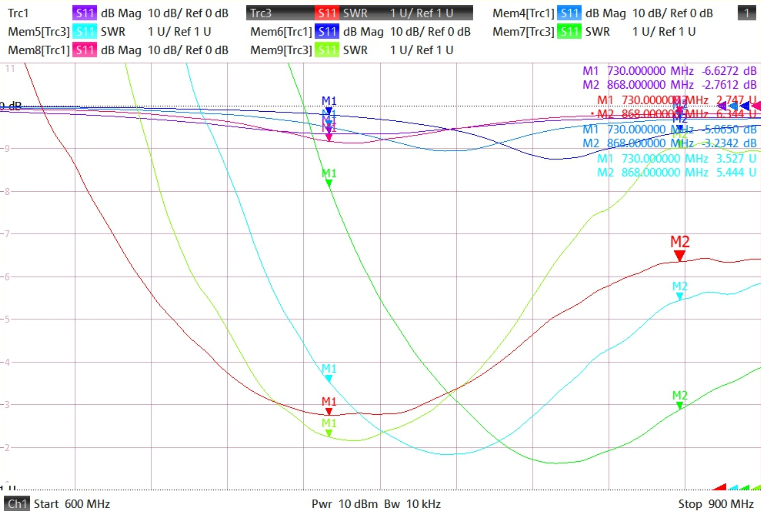

This write-up was supposed to come out in February 2020, but some other, “more important” causes made me reschedule a bit, well, by almost a year. In late autumn 2019 I asked a friend who has not only access to a spectrum analyser but, more importantly, the knowledge and expertise to use it, to measure the properties of five different antennas I had acquired during that year, mainly for experimental purposes. I have been carrying the charts presented below in my backpack for most of this time, and now is finally a good moment to share the measurement results with you.

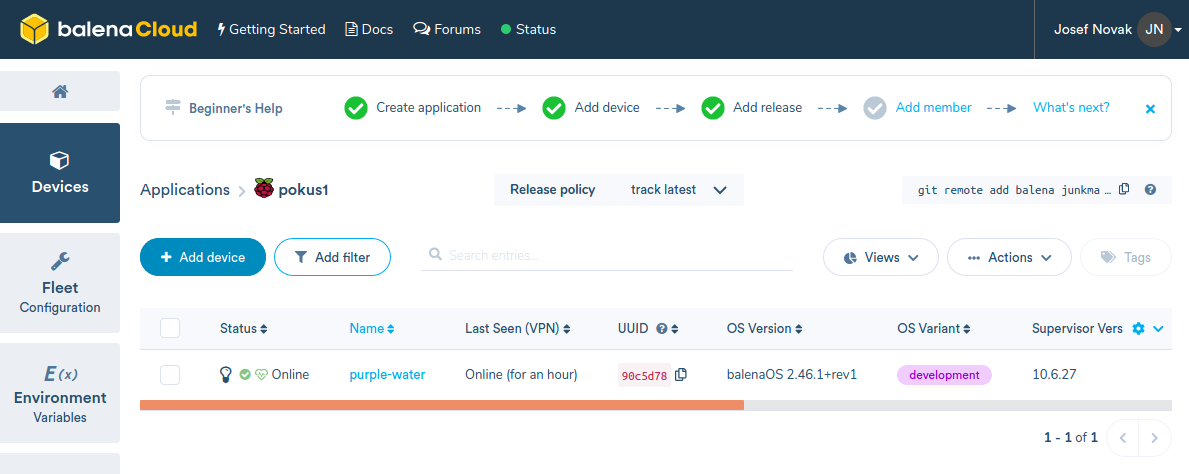

Antenna #1

This is the first antenna, the one originally used with the CUBE ONE trackers. It took me some time to find a perfect match that would fit behind the canopy without drilling a hole into the hood. I discovered this one at the Ampér 2018 electronics fair, which used to take place every April at the Brno exhibition centre. For a long time I was not certain what the company’s name was. I have just found out that it was Sectron and that the antenna type is AO-AGSM-TG09.

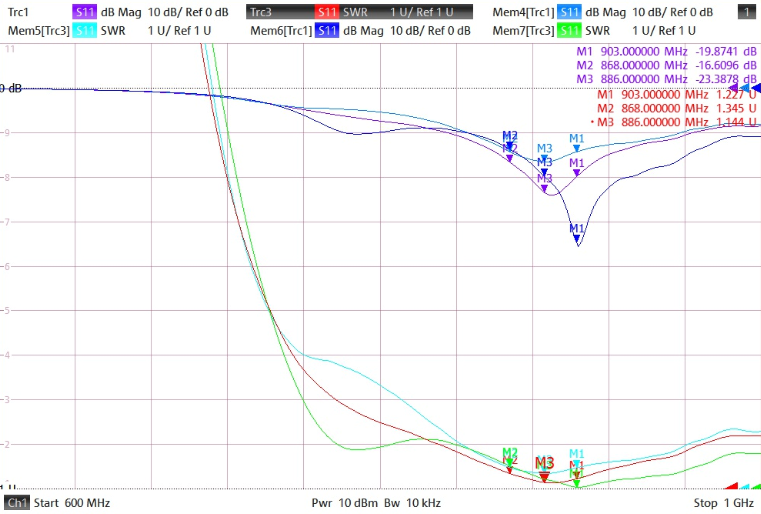

It was love at first sight! Tiny dimensions, an SMA connector, a design made specifically for the 868 MHz band, and the ability to bend made it so perfect. It works quite well with a metallic counterpoise, which in the CUBE ONE was located inside the box right under the PCB. Surprisingly, it performs rather well in air-to-ground traffic, despite the measurement result. Well, see for yourself:

The M1 marker sits at around 730 MHz, while the frequency band we require (868 MHz) is way out of the valley – find the M2 markers on the right. The antenna’s configuration also has a huge impact on its performance: the red line shows the antenna straight, the light-blue one shows it bent by 90 degrees, and the bottom green one shows its properties with a hand-held screwdriver touching the outer ring of the SMA connector, which makes it almost well tuned. Nice, but obviously a toy for children, as Zdenek commented.

Antenna #2

Why and how did I find this gemstone? Mainly because the CUBE TWO needed one and, as I might have mentioned earlier, I had just a fuzzy idea of which company I had purchased the first ones from. It took some time, but after endless nights spent comparing and contrasting various types, sizes, brands and even colours I discovered the 2J010 from SOS Electronic .. which also seems to be out of stock at the moment .. 🙁

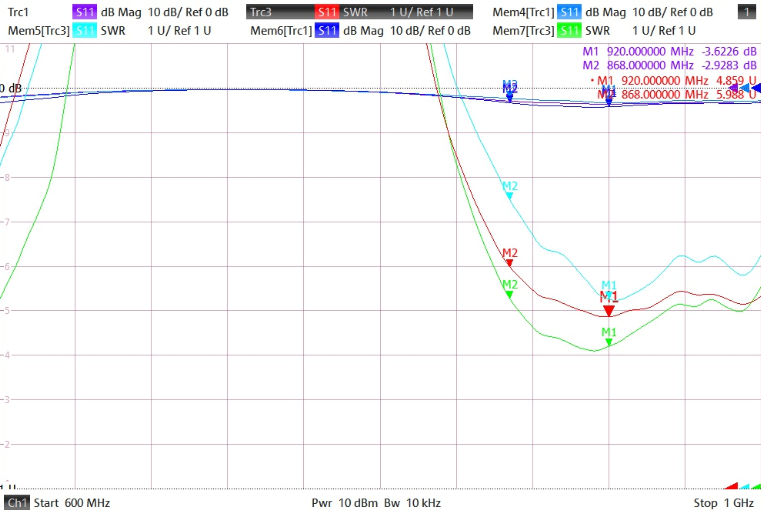

This story is still at its very beginning and I am already going to spoil the drama – this antenna performs the best of all the ones presented here. It performs very nicely at 868 MHz – see the markers M2 and M3 (-16 dB and -23 dB respectively). The M1 marker is set at 903 MHz. The bottom green line is again with a screwdriver touching the antenna, and its influence is negligible. This antenna really is the best tuned. It allows you to see other gliders at a distance of 5-7 km all around you (well, this number depends hugely on the mutual attitude and position of the gliders, of course).
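To get a feeling for what marker readings like -16 dB and -23 dB mean in practice, here is a minimal Python sketch. It assumes the charts show the reflection coefficient (S11, i.e. return loss with a negative sign) in dB, which the post does not state explicitly. The function name `s11_to_match` is made up for this illustration.

```python
def s11_to_match(s11_db: float) -> dict:
    """Convert an S11 reading in dB (a negative number) into matching figures.

    Assumption: the analyser markers report the reflection coefficient in dB.
    """
    gamma = 10 ** (s11_db / 20)        # magnitude of the reflection coefficient
    vswr = (1 + gamma) / (1 - gamma)   # voltage standing wave ratio
    reflected_pct = 100 * gamma ** 2   # share of power bounced back, in percent
    return {"gamma": gamma, "vswr": vswr, "reflected_pct": reflected_pct}


# Marker levels quoted in the text for antenna #2
for marker, level_db in (("M2", -16.0), ("M3", -23.0)):
    m = s11_to_match(level_db)
    print(f"{marker}: {level_db} dB, VSWR {m['vswr']:.2f}, "
          f"reflected power {m['reflected_pct']:.2f} %")
```

Under that assumption, -16 dB corresponds to a VSWR of roughly 1.38 with about 2.5 % of the power reflected, and -23 dB to a VSWR of roughly 1.15 with about 0.5 % reflected.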

Antenna #3

Another specimen with a bendable joint. It looks like a Wi-Fi piece but it is not. Its performance is poor. Touching the ground with a screwdriver did not have much effect, but touching the joint had a significant one. Even worse properties were observed when a hand was in close proximity to the antenna, rendering it rather useless. Next!

Antenna #4

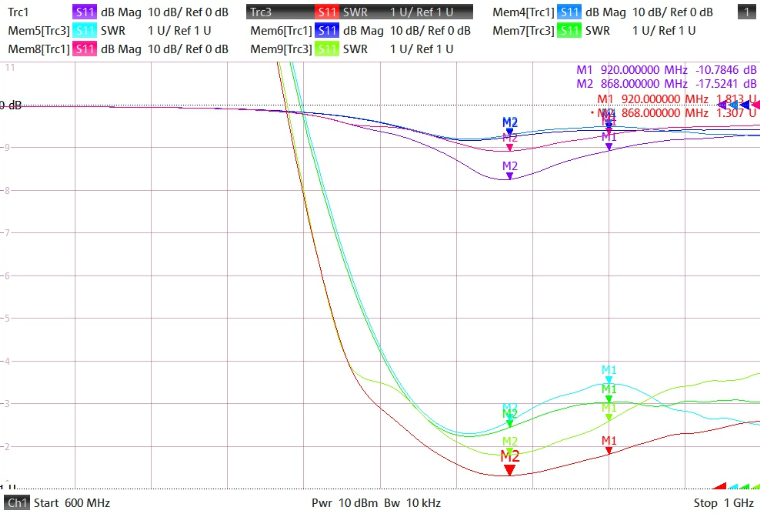

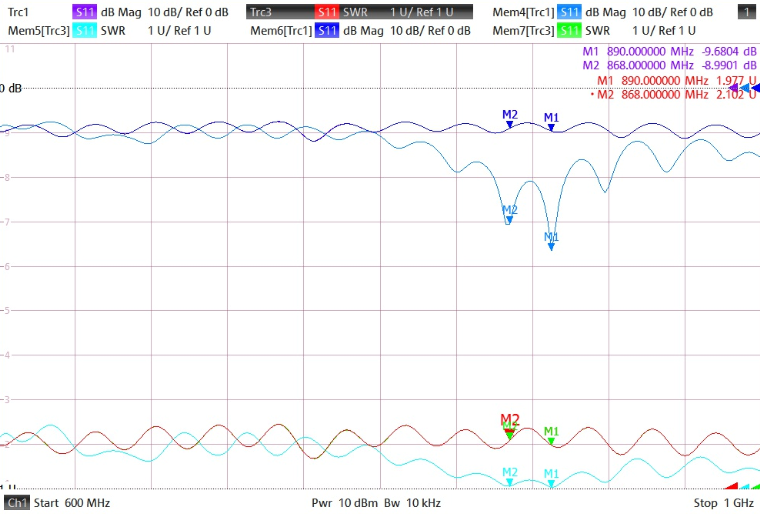

My personal favourite. It gave me the longest air-to-air distance I encountered during the last gliding season – 23 km! And that is only the farthest glider I happened to notice while playing with the LK8000. There might have been even longer distances! (It could be a good idea to add this information to the flight record..) Furthermore, it seems to receive other gliders reliably at a distance of 5-10 km, which makes it even better than antenna #2. However, it is a bit bulky and kind of a pain to fit inside the tight cabin of the LS-1. But with the LS-8 it won’t be any better 😉
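To put those distances into perspective, the following Python sketch computes the free-space path loss at 868 MHz for 5, 10 and 23 km. This is an idealised textbook formula, not something measured here: it ignores antenna gain, transmit power, receiver sensitivity, fuselage shadowing and the mutual position of the gliders, none of which are given in this post. The function name `fspl_db` is made up for this illustration.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def fspl_db(distance_km: float, freq_mhz: float) -> float:
    """Free-space path loss in dB between two isotropic antennas."""
    distance_m = distance_km * 1_000.0
    freq_hz = freq_mhz * 1e6
    return 20 * math.log10(4 * math.pi * distance_m * freq_hz / SPEED_OF_LIGHT)


# Distances mentioned in the text, evaluated at 868 MHz
for d_km in (5, 10, 23):
    print(f"{d_km:>2} km: {fspl_db(d_km, 868.0):.1f} dB")
```

In free space, going from 5 km to 23 km costs about 13 dB of extra path loss, which hints at how favourable the conditions must have been for that one 23 km contact.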

A screwdriver in its vicinity had no influence whatsoever. Neither did a hand around its root or anywhere along its body. Its tuning to the 868 MHz band is a bit worse, but it certainly has better gain than #2. Marker M2 is at 868 MHz and M1 at 920 MHz.

Similarly to #3, the #4 has been sourced from China, which gives it a nicer price tag (but who knows what will happen with shipping and taxes in 2021?) along with the sour uncertainty about whether such a purchase can be repeated. Some other pieces I had bought were completely out of spec, and violent manual adjustments had to be performed before throwing them into the garbage bin. This buy might have been pure luck, or it might be a good source of nice antennas! Who knows? But I love it! 🙂

Antenna #5

I spotted a similar kind of antenna at the T-Cup 2019 in Káďa’s Cirrus. It seemed to be a good idea to use one (or two) sector antennas to scan the airspace in front of you with higher precision – not only to detect possible incursions but also to spot other contestants thermalling on the track ahead of you. Her antenna was apparently a custom-built piece of PCB (I had a photo.. somewhere) and I also wanted to explore this area a bit. For that reason I decided to obtain a completely different antenna ‘architecture’, including a 2 m long cable.

The measurement has shown that it is tuned to somewhere around the 900 MHz band. The cable, however, influences its properties significantly, and the dimensions and material of the body it is mounted on do so even more. Dead end.

Summary

Antenna #2 seems to be the best tuned for the band we need for our OGN adventures, while #4 seems to provide the best air-to-air range. Both of them can provide pretty decent service in terms of range and reliability of data reception. Hence both of them shall and will be considered for the upcoming seasons 🙂 As #2 is not available at the moment, I might try to buy more samples of #4 and check whether all of them have the same or similar properties as the single sample I have at hand. Still, buying stuff from China is a tricky business. Either way, I will certainly share the outcomes once they are available! 🙂